Description

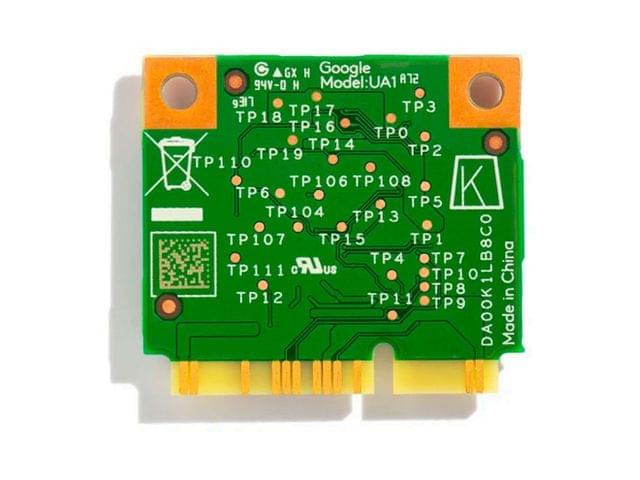

The Coral Mini PCIe Accelerator is a PCIe module that brings the Edge TPU coprocessor to existing systems and products.

The Edge TPU is a small ASIC designed by Google that provides high-performance ML inferencing with low power requirements: it can perform 4 trillion operations per second (4 TOPS), using 0.5 watts for each TOPS (2 TOPS per watt). For example, it can execute state-of-the-art mobile vision models such as MobileNet v2 at almost 400 FPS in a power-efficient manner. This on-device processing reduces latency, increases data privacy, and removes the need for constant high-bandwidth connectivity.
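The figures quoted above can be cross-checked with a little arithmetic. The sketch below is only a worked calculation from the numbers in this description (4 TOPS, 0.5 W per TOPS, roughly 400 FPS); the function names are illustrative, and the results are peak figures implied by the listing, not measured values.

```python
# Figures quoted in the product description (peak values, not measurements).
PEAK_TOPS = 4.0        # trillion operations per second
WATTS_PER_TOPS = 0.5   # power drawn per TOPS
QUOTED_FPS = 400.0     # approximate MobileNet v2 frame rate


def peak_power_watts(tops: float, watts_per_tops: float) -> float:
    """Power implied at peak throughput: TOPS multiplied by watts per TOPS."""
    return tops * watts_per_tops


def efficiency_tops_per_watt(watts_per_tops: float) -> float:
    """Efficiency is the reciprocal of the per-TOPS power cost."""
    return 1.0 / watts_per_tops


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    print("Peak power (W):", peak_power_watts(PEAK_TOPS, WATTS_PER_TOPS))
    print("Efficiency (TOPS/W):", efficiency_tops_per_watt(WATTS_PER_TOPS))
    print("Per-frame budget (ms):", frame_budget_ms(QUOTED_FPS))
```

In other words, 4 TOPS at 0.5 W per TOPS implies about 2 W at peak, and a rate near 400 FPS leaves roughly 2.5 ms per frame.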

The Mini PCIe Accelerator is a half-size Mini PCIe card designed to fit in any standard Mini PCIe slot. This form factor enables easy integration into ARM and x86 platforms, so you can add local ML acceleration to products such as embedded platforms, mini-PCs, and industrial gateways.

Key Features

- Performs high-speed ML inferencing: The on-board Edge TPU coprocessor can perform 4 trillion operations per second (4 TOPS), using 0.5 watts for each TOPS (2 TOPS per watt). For example, it can execute state-of-the-art mobile vision models such as MobileNet v2 at almost 400 FPS in a power-efficient manner.

- Works with Debian Linux: Integrates with any Debian-based Linux system with a compatible card module slot.

- Supports TensorFlow Lite: No need to build models from the ground up. TensorFlow Lite models can be compiled to run on the Edge TPU.

- Supports AutoML Vision Edge: Easily build and deploy fast, high-accuracy custom image classification models to your device with AutoML Vision Edge.
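To illustrate the TensorFlow Lite feature listed above, here is a minimal sketch of running a classification model on the Edge TPU from Python. It assumes the Edge TPU runtime (`libedgetpu.so.1`) and the `tflite_runtime` package are installed, that the model has already been compiled for the Edge TPU, and that `image` is a NumPy array already resized to the model's input height and width. The helper names `make_interpreter`, `top_k`, and `classify` are hypothetical and not part of this listing.

```python
EDGETPU_LIB = "libedgetpu.so.1"  # Edge TPU runtime library name on Linux (assumed installed)


def make_interpreter(model_path):
    """Create a TensorFlow Lite interpreter that delegates to the Edge TPU."""
    # Imported lazily so this file still loads on machines without the runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    return Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate(EDGETPU_LIB)],
    )


def top_k(scores, k=3):
    """Return the k highest (class_index, score) pairs, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def classify(model_path, image, k=3):
    """Run one inference; `image` must match the model's input height and width."""
    interpreter = make_interpreter(model_path)
    interpreter.allocate_tensors()
    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]

    # Add a batch dimension and cast to the dtype the model expects.
    batch = image.astype(input_detail["dtype"])[None, ...]
    interpreter.set_tensor(input_detail["index"], batch)
    interpreter.invoke()

    scores = interpreter.get_tensor(output_detail["index"]).ravel().tolist()
    return top_k(scores, k)
```

A call such as `classify("model_edgetpu.tflite", image)` (the file name is a placeholder) would return the top three class indices with their raw scores; mapping indices to label names is left to the caller.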


Coral Mini PCIe Accelerator


- Ships in 10-12 working days




ADDRESS

Tenet Technetronics, # 2514/U, 7th 'A' Main Road, Opp. to BBMP Swimming Pool, Hampinagar, Vijayanagar 2nd Stage.

Bangalore

Karnataka - 560104

IN

Tenet Technetronics focuses on “Simplifying Technology for Life” and has worked toward that goal since its founding in 2007. Founded by a group of young graduates with guidance from experienced professionals and academicians, the company focuses on delivering high-quality products at the right cost, backed by support and long-term engagement with customers. “We don’t believe in a sell-and-forget model,” and we concentrate on building relationships with customers that accelerate and enhance their next exciting project.